Technical Overview

This overview presents in-depth information on the history of the KIP Survey, content development, survey methodology, and validation studies, organized around the four questions below.

What is the KIP Survey? Why was the KIP Survey developed and what is it used for?

The Kentucky Incentives for Prevention (KIP) Survey provides biennial population surveillance data for schools, communities, and state-level planners to use in their prevention efforts. The KIP Survey is Kentucky’s largest source of data on student use of alcohol, tobacco, and other drugs (ATOD), as well as on a number of factors related to potential substance abuse. In 2016, over 110,000 students representing 149 school districts completed the survey, giving those communities an invaluable substance abuse prevention tool. Districts use their KIP results extensively for grant writing, prevention activities, and other program-planning needs.

In 1997, Kentucky was one of the first five states (along with Illinois, Kansas, Oregon, and Vermont) to receive a State Incentive Grant (SIG) from the federal Center for Substance Abuse Prevention. The Kentucky SIG, called the Kentucky Incentives for Prevention (KIP) Project, had three goals: (1) strengthening the state’s youth substance abuse prevention system, (2) strengthening the prevention systems within funded local communities, and (3) improving the health and well-being of youth 12 to 17 years of age by reducing risk factors, increasing protective factors, and reducing the use of alcohol, tobacco, and other drugs.

As part of this initiative, the KIP Survey has been administered biennially, in the autumn of even-numbered years. KIP is operated by the Substance Abuse Prevention Program of Kentucky’s Cabinet for Health and Family Services, in collaboration with individual school districts across the Commonwealth. The survey is offered free of charge to all school districts in the Commonwealth. It is intended to anonymously gauge student use of alcohol, tobacco, and other drugs (ATOD), as well as factors that research has shown to be correlated with youth substance misuse and abuse (e.g., peer influences, perception of risk, school safety). Since 2006, questions addressing additional illicit substances, bullying, gambling, mental health, and relationship violence have been added to the survey (see the description of item evolution below). These supplementary items were developed through a consensus process involving school personnel, community prevention planners, and state officials. Taken as a whole, the survey is intended to assist school, community, and state-level planners in their prevention efforts through periodic population surveillance.

School district and individual student participation in KIP has always been voluntary. In 2016, approximately 111,700 students in grades 6, 8, 10, and 12 were surveyed. Participating students came from 113 of Kentucky’s 120 counties and 149 of its 173 public school districts. This breadth of school and student participation lends credence to the representativeness and value of the biennial results as a population-level snapshot of youth substance misuse and abuse.
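The county and district counts above translate into coverage rates with simple arithmetic. The sketch below uses only the 2016 counts reported in this section; note that it measures geographic and district coverage, not a student-level response rate, which would require enrollment figures not given here. The `coverage_rate` function is an illustrative name, not part of any KIP tooling.

```python
# Coverage counts reported for the 2016 KIP administration: (participating, total)
coverage = {
    "counties": (113, 120),
    "public school districts": (149, 173),
}


def coverage_rate(participating: int, total: int) -> float:
    """Share of eligible units that took part, as a percentage."""
    return 100 * participating / total


for unit, (part, total) in coverage.items():
    print(f"{unit}: {part}/{total} = {coverage_rate(part, total):.1f}%")
# counties: 113/120 = 94.2%
# public school districts: 149/173 = 86.1%
```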

Kentucky’s KIP Survey was developed and validated in 1999 by researchers from the Pacific Institute for Research and Evaluation (PIRE), in partnership with the Center for Substance Abuse Prevention (CSAP) at the Substance Abuse and Mental Health Services Administration (SAMHSA). Subsequent to the initial development of the KIP Survey, REACH of Louisville was contracted by the state to provide a range of evaluation and consultation services, including the biennial administration and analysis of KIP results.

How is KIP administered, scored, protected, and disseminated?

Survey administration

Classroom administration of the paper survey (including distribution, giving instructions, completing the survey, and collecting the survey) takes between forty and fifty minutes. Classroom administration of the web-based survey takes slightly less time.

School districts have some flexibility in scheduling the survey within an approximately six-week window beginning in October. Results are scanned, tabulated, and reported within three to four months of administration.

District-level results are reported only to the school district and not released in a public report.

Because the KIP Survey has been administered in Kentucky since 1999, results can be compared over time.

There is no cost to the individual districts (costs are paid by the Substance Abuse Prevention Program, Cabinet for Health and Family Services).

Extensive efforts go into ensuring the anonymity of students who complete the brief survey and ensuring that no student feels coerced to participate.

The KIP Survey utilizes a passive consent model.

All KIP Survey materials, including parental notification letters and opt-out forms, the KIP Fact Sheet, survey instructions and the survey form itself, are available in Spanish.

To minimize the burden on KIP Coordinators, efforts have been made over the years to make the process as seamless as possible. At the end of each survey period, all Coordinators are asked to complete a follow-up survey about their experience and to suggest improvements to current methods. This feedback is incorporated to the greatest extent possible and has led to updates to the training manuals, shipping procedures, and survey content.

Security, data retention, and data destruction are handled as follows.

When paper surveys are used, students are directed to place their completed survey into an individual envelope and seal it. The sealed envelopes are bundled and labeled by school, then packaged into a single shipment from the district. The KIP Coordinator uses the KIP Survey Return Form (provided in the KIP training manual, with a web-based version linked from the REACH website) to notify REACH that the district’s survey boxes have been weighed and measured and are ready for pickup. REACH then emails prepaid shipping labels to the KIP Coordinator, and the survey boxes are shipped directly from the school district to the survey scanning company.

Paper surveys are kept in a locked facility on REACH property for up to two years after the date of administration, at which point they are shredded.

KIP electronic data files are stored on a secure server accessible only to key REACH staff with authorized access.

Each participating school district receives the following:

A comprehensive training manual

A set of preliminary cross-tabulations

A district-level report including comparisons with the region, the rest of the state and the US (when available)

A district-level trend report, showing within-district trend data for applicable questions from the current administration back to 2004

A report synthesizing the core-measure items required to be submitted by all Drug Free Communities (DFC) grant recipients as a component of the federal DFC National Cross-Site Evaluation

Interested school districts also have the option to order supplementary analyses of their results by gender, school building, combinations of schools, or other desired domains.

For public consumption, REACH of Louisville produces:

A State and Regional Data Report, composed of maps and graphs showing regional ATOD trends for each of Kentucky’s fourteen Regional Prevention Centers (RPCs)

A Statewide Trends report, showing statewide trend data for applicable questions from the current administration back to 2004

State and regional KIP reports are available at: http://reachevaluation.com/projects/kentucky-incentives-for-prevention-kip-survey/

The KIP data website developed for KIP Coordinators is at http://reachevaluation.com/kip/ and includes both training manuals, PDFs of all required forms and materials, a comprehensive FAQ list, and contact information for assistance.

Consent model

Because the KIP Survey includes respondents who are minors, informed consent from parents is an important (and required) component of participation. School districts must explicitly agree to obtain informed consent. Surveys of student populations have historically obtained parental consent in one of two ways: (1) passive consent (i.e., parents are notified and may actively opt their child out) or (2) active consent (i.e., parents actively provide written permission for their child to participate). The KIP Survey uses a passive consent process that balances the need to protect students and families from intrusive questioning about sensitive topics against the compelling need for schools and communities to obtain accurate and useful information about substance use, mental health, and school safety for program development and the protection of children.

The KIP Survey includes a number of procedures and safeguards that support informed consent by both parents and students. The primary protections built into the KIP Survey process are: (1) multiple steps to ensure that parents and students understand that participation is voluntary, completely anonymous, and free of coercion, and (2) data aggregation and reporting formats that preserve anonymity.

A review of the passive consent process used with the KIP Survey (available on request) shows that it is consistent with applicable state and federal laws and regulations, including (a) the No Child Left Behind Act (NCLB), (b) the Protection of Pupil Rights Amendment (PPRA), and (c) the Family Educational Rights and Privacy Act (FERPA).

How has the KIP Survey changed over time? Who has been involved in ongoing item development?

Many of the core items on KIP were originally chosen by the federal Center for Substance Abuse Prevention (CSAP), based on extensive research on prevalence, risk, and resilience in youth substance abuse. Basing the survey on the federal model was intended to enable comparisons with other states and the nation while also addressing Kentucky’s needs and enabling within-state comparisons. The core items are similar (and sometimes identical) to those of several national-level instruments designed for this purpose, such as Hawkins and Catalano’s Communities That Care Youth Survey (CTCYS)1, the CDC’s Youth Risk Behavior Surveillance System (YRBSS)2, and Monitoring the Future (MTF)3. A substantial research literature supports the use of these items and forms of measurement for population-level surveillance in prevention planning.

The original KIP Survey instrument was developed in 1999, and remained static until the 2006 administration. REACH of Louisville has been the contractor in charge of KIP Survey administration since 2003; prior administrations were coordinated by the Pacific Institute for Research and Evaluation’s (PIRE) Louisville Center. Since 2006, questions addressing additional illicit substances, bullying, mental health, gambling and other Kentucky-specific items have been added to the survey (see below).

KIP Survey content is continually assessed for suitability, utility, and benefit. Every effort is made to keep survey items current and relevant. Reviewers consider recent national trends, cultural shifts, demographic changes, slang terminology, updates to comparable national surveys, and emerging research and priorities identified by the Substance Abuse and Mental Health Services Administration (SAMHSA).

Revisions to the survey involve in-depth consultation and guidance from multiple agencies/entities, including:

- Kentucky’s Department for Behavioral Health, Developmental and Intellectual Disabilities

- Members of Kentucky’s State Epidemiological Outcomes Workgroup (SEOW)

- Evaluators of SAMHSA’s Drug Free Communities (DFC) grant initiative

- Directors of Kentucky’s 14 Regional Prevention Centers

- KIP Survey Coordinators, individuals designated by each school district to manage school-level administration of the survey

In 2006, notable updates to the survey included the addition of several questions on gambling, the elimination of a series of questions devoted to family, and amendments distinguishing methamphetamine from other types of stimulants/uppers.

In 2012, two sets of questions were added: items on past-30-day psychological distress, using the Kessler Psychological Distress Scale (K6) as administered in the CDC’s Behavioral Risk Factor Surveillance System (BRFSS) and SAMHSA’s National Survey on Drug Use and Health (NSDUH), and items on perceived peer disapproval of alcohol, tobacco, marijuana, and prescription drug abuse.

Several significant adjustments were made to the KIP Survey instrument in 2014. Questions were added to address:

- heroin use and perception of risk associated with heroin use

- novel tobacco products (e.g., electronic cigarettes, hookah, dissolvable products)

- synthetic marijuana

- steroids

- prescription drug diversion

- bullying and online bullying

- relationship violence

- self-harm

- suicide

- family member in the military

1 https://store.samhsa.gov/product/Communities-That-Care-Youth-Survey/CTC020

2 https://www.cdc.gov/healthyyouth/data/yrbs/index.htm

3 http://monitoringthefuture.org/

Is the KIP Survey science-based?

Science of substance abuse prevention

Efforts to prevent substance abuse in Kentucky are grounded in the field of prevention science. While the concepts of prevention and early intervention are long-standing in public health, community psychology, and related fields, only in the past three decades have scientific knowledge and methodology evolved to the point that findings can be usefully applied to substance abuse programs and practices (Hawkins, Catalano, & Arthur, 2002)4. Prevention science is closely related to ongoing work in health promotion and behavioral risk reduction (Jason, Curie, Townsend, Pokorny, Katz, & Sherk, 2002)5. Certain core concepts are fundamental to this emerging science of substance abuse prevention. All rest on the premise that clear estimates of the prevalence and incidence of substance abuse across populations and settings (such as those provided by the KIP Survey) are crucial for gauging change and the effectiveness of prevention and treatment programs. Key findings in prevention science include:

There are a variety of developmental pathways to substance misuse and abuse.

Early childhood developmental and family factors can play a substantial role in creating subsequent vulnerability.

The eventual emergence of substance abuse is facilitated or ameliorated by specific risk and resilience factors, which can also serve as targets for intervention in school and community settings.

Substance abuse prevention efforts need to be systemic in orientation, and have been shown to be effective in family, school, peer group, mass media, or community contexts (or combinations of these).

Initial KIP Survey Validation Study

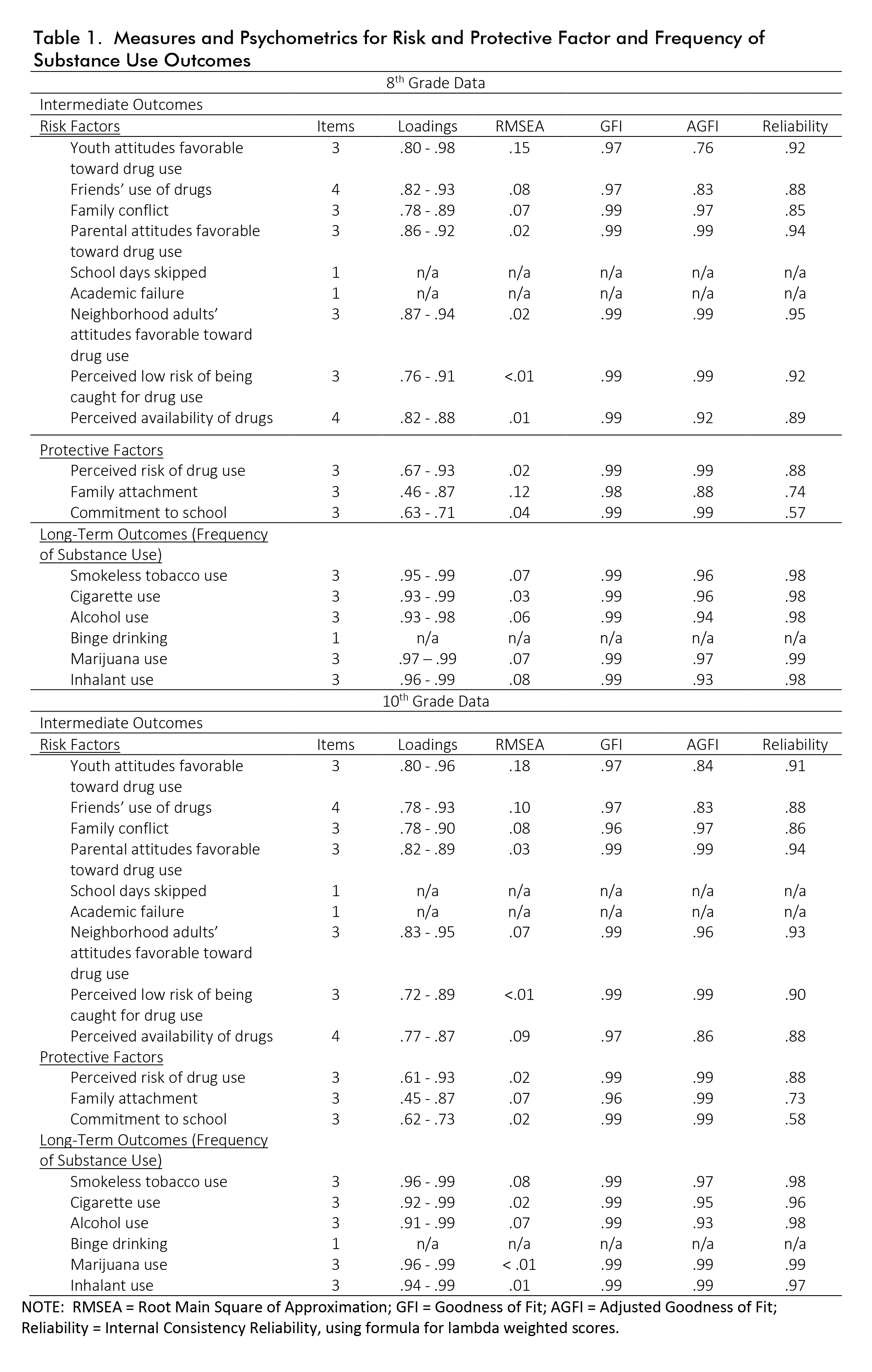

An initial validation study of the KIP Survey items was conducted in 2003 as part of an early Pacific Institute for Research and Evaluation (PIRE) study of the overall project6; the study is available upon request. In effect, it replicated the reliability and validity studies that Hawkins and Catalano conducted on the original Communities That Care Youth Survey (see below). The excerpted table from that study gives reliability estimates for the prevalence and risk/resilience items in Grades 8 and 10 (these items constitute the core elements of the current KIP Survey). For each construct, the table shows the number of items, factor loadings, root mean square error of approximation (RMSEA), goodness-of-fit index (GFI), adjusted goodness-of-fit index (AGFI), and internal consistency reliability.
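The study text does not name the internal consistency coefficient it reports. Cronbach’s alpha is the most common choice for multi-item scales like these, so the sketch below assumes it: alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items in a construct. The response matrix is hypothetical, and this is an illustration of the statistic, not the PIRE study’s analysis code.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for one construct.

    `scores` has one row per respondent and one column per item,
    e.g. hypothetical 1-4 Likert codes for a risk-factor scale.
    """
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least two items and two respondents")
    k = len(scores[0])  # number of items in the construct
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of respondents' total scores on the construct
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers from five students to a three-item scale
responses = [
    [1, 1, 2],
    [2, 2, 2],
    [3, 3, 4],
    [4, 3, 4],
    [2, 2, 1],
]
print(round(cronbach_alpha(responses), 2))  # 0.91
```

Alpha rises as items covary strongly relative to their individual variances. It says nothing about whether the items load on a single factor, which is why the table also reports factor loadings and model fit indices (RMSEA, GFI, AGFI).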

Communities That Care Youth Survey (CTCYS)7

As noted previously, the KIP Survey was modeled on the SAMHSA-sponsored Communities That Care Youth Survey (CTCYS), pioneered by Hawkins and Catalano and adopted by SAMHSA for use by states and municipalities.

Communities That Care (CTC) is a community-level prevention approach designed to reduce risk factors associated with problem behaviors and strengthen protective factors associated with positive youth outcomes. The CTC model helps community stakeholders and decision makers understand and apply information about risk and protective factors, and educates them about programs that have been shown to promote healthy, positive youth development.

As part of this larger initiative, Hawkins, Catalano, and colleagues developed the Communities That Care Youth Survey (CTCYS) in the 1990s to provide sound information to communities engaged in community-level prevention efforts. Because the CTCYS was developed from research funded by the Center for Substance Abuse Prevention of the U.S. Department of Health and Human Services, its items and methods are in the public domain.8 Specifically, the CTCYS was designed to measure: a) risk and protective factors that predict alcohol, tobacco, and other drug (ATOD) use, b) delinquency and other problem behaviors in adolescents, c) prevalence and frequency of drug use, and d) frequency of antisocial behaviors.

Over the past 30 years, there has been extensive validation research on the CTCYS. For example, Arthur et al. (2002) provided strong evidence of the internal consistency, factor structure, predictive value, and construct validity of the risk and protective factor items that came to make up the survey (now in wide use nationally and internationally). Glaser et al. (2005) analyzed a sample of 172,628 students who took the CTCYS in seven states in 1998, testing the factor structure of the measured risk and protective factors and the equivalence of the factor models across five racial/ethnic groups (African Americans, Asians or Pacific Islanders, Caucasians, Hispanic Americans, and Native Americans), four grade levels (6th, 8th, 10th, and 12th), and male and female students. Results support the construct validity of the survey’s risk and protective factor scales and indicate that the measures are equally reliable for males and females and across the five racial/ethnic groups.

Monitoring the Future Student Survey

Though the KIP Survey is not modeled directly on Monitoring the Future (MTF), it is very similar in content, covering past-month and past-year substance use, perceptions of risk and disapproval, and perceived ease of access to substances. Many of the substance use measures on the MTF survey are worded very similarly to those on the KIP Survey. Unlike the CTCYS, MTF is administered annually to a nationally representative sample of 8th, 10th, and 12th graders. MTF data serve as national comparison points for many KIP Survey measures, specifically past-month and past-year use of many substances.

MTF, also known as the National High School Senior Survey, is a long-term epidemiological study of trends in legal and illicit drug use among American adolescents and adults, as well as their perceived risk and disapproval of each drug. The survey is conducted by researchers at the University of Michigan's Institute for Social Research and funded by research grants from the National Institute on Drug Abuse, one of the National Institutes of Health. Survey participants report their drug use across three time periods: lifetime, past year, and past month. Overall, 43,703 students from 360 public and private schools participated in the 2017 Monitoring the Future survey.

Hundreds of publications have examined the psychometric characteristics, measurement capabilities, and findings of MTF since its inception in 1975. The most recent technical summary of this research is published by the Institute for Social Research at the University of Michigan and is entitled “The Monitoring the Future Project after Thirty-Seven Years: Design and Procedures.”9

Youth Risk Behavior Surveillance System (YRBSS)10

As the KIP Survey has expanded in scope, efforts have continued to draw on existing measures from nationally representative surveillance surveys such as the Youth Risk Behavior Surveillance System (YRBSS). Questions added to the KIP Survey from the YRBSS include items on bullying and cyberbullying, suicidality, and updated measures of certain substances (e.g., synthetic marijuana, heroin).

The YRBSS was developed in 1990 to monitor priority health-risk behaviors that contribute markedly to the leading causes of death, disability, and social problems among youth in the United States. These include: a) behaviors that contribute to unintentional injuries and violence, b) sexual behaviors related to unintended pregnancy and sexually transmitted diseases, c) alcohol and other drug use, d) tobacco use, e) unhealthy dietary behaviors, and f) inadequate physical activity. The YRBSS includes a national school-based survey conducted by the CDC as well as state, territorial, tribal, and local surveys conducted by state, territorial, and local education and health agencies and tribal governments.

The CDC conducted two test-retest reliability studies of the national YRBS questionnaire, one in 1992 and one in 2000. In the first study, the 1991 version of the questionnaire was administered to a convenience sample of 1,679 students in grades 7–12 on two occasions, 14 days apart. Approximately three fourths of the questions were rated as having substantial or higher reliability (kappa = 61%–100%), and no statistically significant differences were observed between the prevalence estimates from the first and second administrations. Responses of students in grade 7 were less consistent than those of students in grades 9–12, indicating that the questionnaire is best suited for students in the higher grades. In the second study, the 1999 questionnaire was administered to a convenience sample of 4,619 high school students on two occasions, approximately 2 weeks apart11. Approximately one in five questions (22%) had significantly different prevalence estimates between the first and second administrations. Ten questions (14%) had both kappas below 61% and significantly different time-1 and time-2 prevalence estimates, indicating that their reliability was questionable. These problematic questions were revised or deleted in later versions of the questionnaire.
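The kappa statistic used in both CDC studies corrects raw test-retest agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed share of matching answers and p_e is the match rate expected from each administration’s answer distribution. The minimal sketch below computes Cohen’s kappa for a single item answered by the same students at time 1 and time 2. The responses are hypothetical, and the 0.61 cut-off in the illustrative `is_substantial` helper simply mirrors the “substantial or higher” band quoted above.

```python
from collections import Counter


def cohens_kappa(time1: list[str], time2: list[str]) -> float:
    """Cohen's kappa for one item answered twice by the same students."""
    if len(time1) != len(time2) or not time1:
        raise ValueError("need two non-empty, equally long response lists")
    n = len(time1)
    # Observed agreement: share of students who gave the same answer both times
    p_obs = sum(a == b for a, b in zip(time1, time2)) / n
    # Chance agreement: product of each answer's share at time 1 and at time 2
    counts1, counts2 = Counter(time1), Counter(time2)
    categories = set(counts1) | set(counts2)
    p_exp = sum((counts1[c] / n) * (counts2[c] / n) for c in categories)
    if p_exp == 1:
        # Every student gave the same single answer both times; kappa is undefined
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_obs - p_exp) / (1 - p_exp)


def is_substantial(kappa: float, cutoff: float = 0.61) -> bool:
    """Flag items in the 'substantial or higher' band (kappa >= 0.61)."""
    return kappa >= cutoff


# Hypothetical past-30-day-use answers from four students, two weeks apart
t1 = ["yes", "yes", "no", "no"]
t2 = ["yes", "no", "no", "no"]
k = cohens_kappa(t1, t2)
print(round(k, 2), is_substantial(k))  # 0.5 False
```

Here 75% raw agreement shrinks to a kappa of 0.5 because half of that agreement would be expected by chance, which is why kappa rather than percent agreement is used to rate item reliability.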

No study has assessed the validity of all self-reported behaviors included on the YRBSS questionnaire. However, in 2003, the CDC reviewed existing empirical literature to assess cognitive and situational factors that might affect the validity of adolescent self-reports of behaviors measured by the YRBSS questionnaire12. The review found that, although self-reports of these types of behaviors are affected by both cognitive and situational factors, these factors do not threaten the validity of self-reports of each type of behavior equally. In addition, types of behavior differ in the extent to which their self-reports can be validated by an objective measure. For example, reports of tobacco use are influenced by both cognitive and situational factors and can be validated by biochemical measures (e.g., cotinine). Reports of sexual behavior can also be influenced by both cognitive and situational factors, but no standard exists to validate them. In contrast, reports of physical activity are influenced substantially by cognitive factors and to a lesser extent by situational ones; such reports can be validated by mechanical or electronic monitors (e.g., heart rate monitors). Understanding how these factors differentially compromise the validity of self-reports for different types of behavior can help policymakers interpret the data and help researchers design measures that do not compromise validity.

4. Hawkins, J. D., Catalano, R. F., & Arthur, M. W. (2002). Promoting science-based prevention in communities. Addictive Behaviors, 27(6), 951–976.

5. Jason, L. A., Curie, C. J., Townsend, S. M., Pokorny, S. B., Katz, R. B., & Sherk, J. L. (2002). Health promotion interventions. Child & Family Behavior Therapy, 24(1–2), 67–82.

6. Collins, Johnson, & Becker (2003). KIP Survey Research Monograph. Pacific Institute for Research and Evaluation.

7. Arthur, M. W., Briney, J. S., Hawkins, J. D., Abbott, R. D., Brooke-Weiss, B. L., & Catalano, R. F. (2007). Measuring risk and protection in communities using the Communities That Care Youth Survey. Evaluation and Program Planning, 30(2), 197–211.

Arthur, M. W., Hawkins, J. D., Pollard, J. A., Catalano, R. F., & Baglioni, A. J., Jr. (2002). Measuring risk and protective factors for use, delinquency, and other adolescent problem behaviors: The Communities That Care Youth Survey. Evaluation Review, 26(6), 575–601.

Briney, J. S., Brown, E. C., Hawkins, J. D., & Arthur, M. W. (2012). Predictive validity of established cut points for risk and protective factor scales from the Communities That Care Youth Survey. The Journal of Primary Prevention, 33(5–6), 249–258.

Glaser, R. R., Van Horn, M. L., Arthur, M. W., Hawkins, J. D., & Catalano, R. F. (2005). Measurement properties of the Communities That Care® Youth Survey across demographic groups. Journal of Quantitative Criminology, 21(1), 73–102.

Hawkins, J. D., Catalano, R. F., Arthur, M. W., Egan, E., Brown, E. C., Abbott, R. D., & Murray, D. M. (2008). Testing Communities That Care: The rationale, design and behavioral baseline equivalence of the Community Youth Development Study. Prevention Science, 9(3), 178.

9. Bachman, J. G., Johnston, L. D., O'Malley, P. M., & Schulenberg, J. E. (2006). The Monitoring the Future Project After Thirty-Two Years: Design and Procedures. Monitoring the Future Occasional Paper 64. Online Submission.

Johnston, L. D., O’Malley, P. M., & Bachman, J. G. (1999). National survey results on drug use from the Monitoring the Future study, 1975-1998. Volume I: Secondary school students.

10. Brener, N. D., Collins, J. L., Kann, L., Warren, C. W., & Williams, B. I. (1995). Reliability of the Youth Risk Behavior Survey questionnaire. American Journal of Epidemiology, 141, 575–580.

Brener, N. D., Kann, L., McManus, T., Kinchen, S. A., Sundberg, E. C., & Ross, J. G. (2002). Reliability of the 1999 Youth Risk Behavior Survey questionnaire. Journal of Adolescent Health, 31, 336–342. Validation studies summarized in: https://www.cdc.gov/mmwr/pdf/rr/rr6201.pdf

Gast, J., Caravella, T., Sarvela, P. D., & McDermott, R. J. (1995). Validation of the CDC's YRBBS alcohol questions. Health Values: The Journal of Health Behavior, Education & Promotion, 19(2), 38-43.

11. Brener, N. D., Kann, L., McManus, T., Kinchen, S. A., Sundberg, E. C., & Ross, J. G. (2002). Reliability of the 1999 Youth Risk Behavior Survey questionnaire. Journal of Adolescent Health, 31, 336–342.

12. Brener, N. D., Billy, J. O. G., & Grady, W. R. (2003). Assessment of factors affecting the validity of self-reported health-risk behavior among adolescents: Evidence from the scientific literature. Journal of Adolescent Health, 33, 436–457.